Assuring the quality and performance of the SQL in Db2® for z/OS® applications is of paramount importance. Indeed, monitoring and improving SQL performance is one of the key duties of Db2 DBAs. Applications with high quality, optimal SQL is essential because it directly impacts the performance, scalability, and cost-effectiveness of database-driven applications. When SQL queries are well-written and efficient, they execute quickly, consume fewer resources, and reduce the load on the database server. This results in faster response times for end-users and ensures that the application remains responsive and efficient, even as data volumes grow.

In high-transaction environments, such as financial services, healthcare, and e-commerce, optimal SQL can make the difference between meeting critical SLAs and experiencing delays that may impact business operations or customer satisfaction.

Optimal SQL also plays a key role in cost management, especially in environments where resources are billed based on usage. Poorly optimized SQL can lead to unnecessary table scans, excessive CPU usage, and higher memory consumption, increasing infrastructure costs. Additionally, high-quality SQL contributes to database stability by minimizing contention for resources, reducing the risk of deadlocks, and preventing potential performance bottlenecks. In the long term, this allows the database to scale effectively without significant hardware upgrades or costly performance-tuning efforts.

Dynamic and Static SQL

In Db2 for z/OS environments, both dynamic and static SQL are used in applications. Static SQL is pre-compiled at the time of application development, meaning that the access path to the data is determined during the compile and bind phases. This process provides stability and performance predictability since the SQL statements are optimized beforehand. With static SQL, Db2 generates an access path and “binds” it into a package, which can be reused without recalculating access paths for each execution. This characteristic makes static SQL especially beneficial for high-volume transactional applications, where predictable response times and minimized CPU usage are crucial.

On the other hand, dynamic SQL is compiled and optimized at runtime, meaning that access paths are determined only when the SQL statement is executed. This flexibility allows applications to handle unpredictable workloads and dynamic user inputs, as queries can be generated on-the-fly. Dynamic SQL is ideal for applications where query structures change frequently, such as ad-hoc reporting or decision-support systems. Although dynamic SQL generally incurs higher CPU costs due to the need for runtime optimization, it allows for greater flexibility in adapting to varying queries and workloads.

Most modern applications rely on dynamic SQL more than static SQL however, both dynamic and static SQL are essential to Db2 for z/OS because they address different performance and functionality requirements. Static SQL is crucial for predictable, high-performance transactions in production environments, helping reduce CPU usage and stabilize application behavior. Meanwhile, dynamic SQL provides the adaptability required for interactive and complex queries where pre-compilation is not feasible. The balance between the two often depends on the nature of the application, workload predictability, and performance considerations, making it essential for Db2 for z/OS environments to support both types effectively.

The bottom line though, is that DBAs are required to understand, tune, and optimize both static and dynamic SQL in Db2 applications.

Optimization Challenges

Monitoring and tuning both static and dynamic SQL in Db2 for z/OS applications present unique challenges due to the distinct nature of each type of SQL. For static SQL, the primary challenge lies in maintaining optimized performance over time. Since static SQL statements are pre-compiled, the access path chosen at the time of the initial bind remains fixed until the application is recompiled or re-bound. As data volumes grow and database structures evolve, these pre-defined access paths may become suboptimal, resulting in performance degradation. This requires DBAs to periodically re-evaluate and re-bind static SQL, which can be labor-intensive, especially in large applications with numerous packages.

Another challenge with static SQL is ensuring that any tuning changes do not inadvertently impact performance elsewhere, especially in production environments. Identifying and analyzing poor-performing static SQL statements often requires the use of specialized tools to gather and interpret metrics, such as buffer pool usage, CPU time, and I/O statistics, to pinpoint issues accurately.

For dynamic SQL, the primary challenge is the unpredictability and variability of runtime queries. Unlike static SQL, dynamic SQL statements are optimized at runtime, meaning that access paths are determined based on the current environment, data distribution, and query parameters. This flexibility, while powerful, can lead to inconsistent performance. The real-time optimization process for dynamic SQL also can consume additional CPU, adding overhead that can become costly in high-transaction environments.

Dynamic SQL performance tuning requires constant monitoring of execution patterns and access paths, often using tools that can capture and analyze SQL statements as they are generated. One key challenge here is capturing queries that change with user inputs or application logic. DBAs must monitor the SQL cache, identify high-resource-consuming queries, and determine if any repetitive, resource-intensive patterns emerge. Tuning dynamic SQL often involves working with the application team to modify queries, adjust indexes, or even consider static alternatives if performance demands stability.

Overall, monitoring and tuning both static and dynamic SQL in Db2 for z/OS is an ongoing process that requires tools, expertise, and a deep understanding of workload patterns. Addressing the unique challenges of each type helps ensure optimal database performance, supporting both high-throughput transactional systems and flexible, user-driven queries.

Infotel DB/IQ QA+

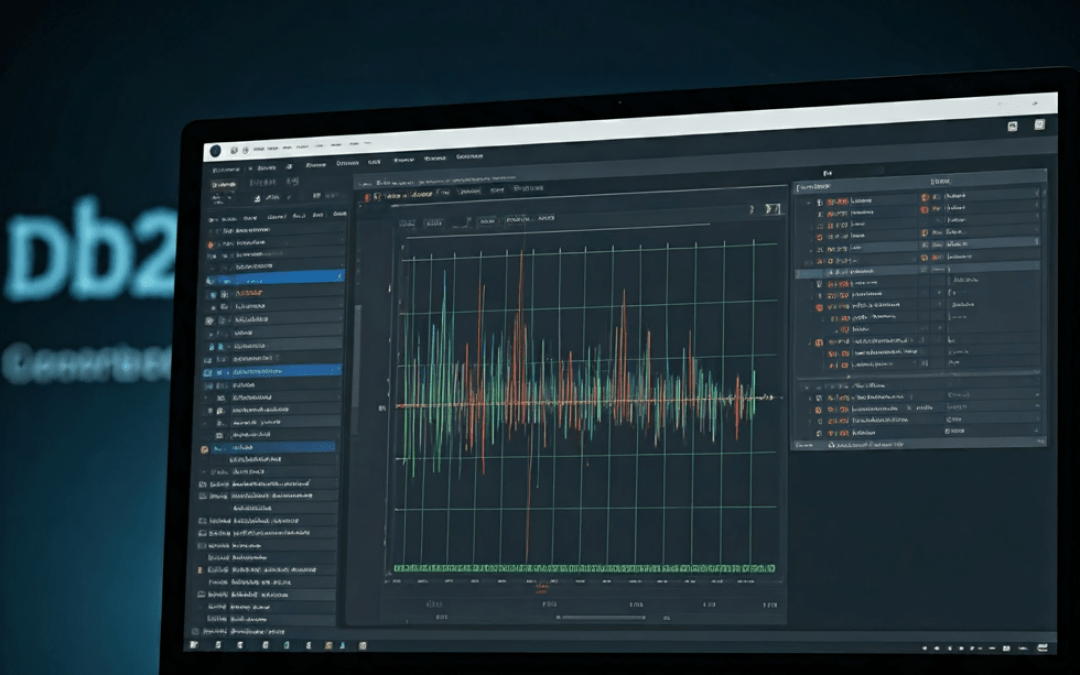

A particularly useful tool for monitoring and improving the quality of your Db2 for z/OS SQL is DB/IQ QA Plus from Infotel Corp.

DB/IQ QA can be used to ensure data consistency, accuracy, and compliance, particularly in high-stakes environments where data integrity is critical. Traditional data quality methods often rely on manual checks and fragmented tools, which are time-consuming and prone to error. DB/IQ QA integrates directly with Db2, allowing for streamlined and comprehensive data validation, reducing operational costs and the likelihood of undetected data issues.

The tool enables monitoring and optimization of both static and dynamic SQL by performing quality assurance on SQL, using a set of more than 350 rules, delivered as a standard, but which can be modified and enhanced by DBAs. No expensive Db2 trace is required. For dynamic SQL, DB/IQ QA+ monitors all activities in the Db2 Statement Cache and reports on vital information, highlighting performance problems and heavy consumers.

By reviewing troublesome SQL and applying optimization rules, the quality and performance of the SQL – both dynamic and static – in your Db2 applications can be consistently reviewed and improved.

By automating key quality assurance processes, DB/IQ QA frees up resources, allowing DBAs and IT teams to focus on strategic initiatives rather than repetitive tasks. The tool is built to monitor and enforce business rules consistently, ensuring regulatory compliance and fostering trust in organizational data. Additionally, DB/IQ QA offers enhanced reporting and analytics capabilities that allow stakeholders to gain insights into data quality trends, helping them make informed decisions and continuously improve their data governance practices.

By Craig S. Mullins